Introduction:

A while back, someone in a security/malware chat room posted about an ELF backdoor. At the time, I couldn't find much information about it, or any related samples or reports. A few weeks ago, I saw a similar sample being discussed on Twitter; a researcher had found it in an open directory.

In this post, I share some notes from my analysis of this sample and related samples. They might help with writing a signature or doing further research. I'm calling this the OpenSSL-1.0.0-fipps backdoor, since that's the string it initially sends to the C2 server and "fipps" has an extra p.

My searches so far haven't turned up any other similar samples, and I haven't seen any sample successfully connect to its C2 on any public sandbox. I also wasn't able to find any executables for the C2 side with the yara rules I wrote and ran against Hybrid Analysis.

Notes:

It's a reverse shell/backdoor. The binary reaches out to the C2 IP and port defined in the binary. You can find the C2 IP by just running strings on it.
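If you'd rather script the strings step, here is a minimal Python sketch. It is not taken from the sample; the function names `ascii_strings` and `candidate_ipv4` are my own, and it simply pulls printable ASCII runs out of the file and keeps anything that looks like a dotted IPv4 address.

```python
import re

# Runs of at least 4 printable ASCII bytes, roughly what `strings` reports.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")
# Four dot-separated groups of 1-3 digits; the range check happens below.
IPV4_LIKE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def ascii_strings(data: bytes) -> list:
    """Return the printable ASCII runs found in `data`."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def candidate_ipv4(data: bytes) -> list:
    """Return IPv4-looking strings found in `data`, in order of appearance."""
    found = []
    for s in ascii_strings(data):
        for hit in IPV4_LIKE.findall(s):
            # Drop matches with an octet above 255 (not a valid address).
            if all(int(part) <= 255 for part in hit.split(".")):
                found.append(hit)
    return found

# Usage (the path is a placeholder):
#   with open("sample.bin", "rb") as fh:
#       print(candidate_ipv4(fh.read()))
```

This will also report any other dotted-quad strings in the file, so treat the output as candidates rather than confirmed C2 addresses.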

Samples:

MD5 hashes:

eb7ba9f7424dffdb7d695b00007a3c6d VT: First Submission 2022-04-21 18:44:09 UTC, submission name: suspicious

97f352e2808c78eef9b31c758ca13032 VT: First Submission 2022-08-26 22:47:54 UTC, submission name: client

3e9ee5982e3054dc76d3ba5cc88ae3de VT: First Submission 2022-11-04 00:18:27 UTC, submission name: client

SHA256 hashes:

8cd16feb7318c0de3027894323a0ccaacb527e071aa4c4b691feb411b6bd0937

40da2329b2b81f237fc30d2274529e6fda4364516b78b4b88659c572fbc4bc02

4e5e42b1acb0c683963caf321167f6985e553af2c70f5b87ec07cc4a8c09b4d8

C2s:

162.220.10.214

107.175.64.203

185.29.10.38

Some historical searches showed that the C2s were running Windows, not that it matters much.

TELFhash for the binaries is: t1afe0d814d67c0dad4ab20c30d4989a94a047eb2688752922ab98d9c1883d917f15cf5f

File command results & diff:

ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 2.6.18, BuildID[sha1]=16eee120b0a557907a782d1405c8f86415902fa5, stripped

ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 2.6.18, BuildID[sha1]=16eee120b0a557907a782d1405c8f86415902fa5, stripped

ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 2.6.18, BuildID[sha1]=2c46d3c40075dc7a193f8041f9458b40fd1f31cf, stripped

The BuildIDs are the same for eb7ba9f7424dffdb7d695b00007a3c6d and 97f352e2808c78eef9b31c758ca13032, and the only difference between the two files is the IP:

< 00004110: 0000 0000 0000 0000 3130 372e 3137 352e ........107.175.

< 00004120: 3634 2e32 3033 0067 6574 6966 6164 6472 64.203.getifaddr

---

> 00004110: 0000 0000 0000 0000 3136 322e 3232 302e ........162.220.

> 00004120: 3130 2e32 3134 0067 6574 6966 6164 6472 10.214.getifaddr

I'm not 100% sure why someone modified just the IP in the binary instead of recompiling it.
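To confirm that two samples differ only in the embedded IP, a byte-level comparison is enough. The following is a rough sketch of that check; `diff_ranges` is a hypothetical helper name of mine, not something from any tool mentioned here.

```python
def diff_ranges(a: bytes, b: bytes) -> list:
    """Return (start, end) offset pairs, end exclusive, where a and b differ."""
    common = min(len(a), len(b))
    ranges = []
    start = None
    for i in range(common):
        if a[i] != b[i]:
            if start is None:
                start = i  # a new run of differing bytes begins here
        elif start is not None:
            ranges.append((start, i))  # the run ended at the previous byte
            start = None
    if start is not None:
        ranges.append((start, common))
    if len(a) != len(b):
        # Any trailing bytes present in only one file count as a difference.
        ranges.append((common, max(len(a), len(b))))
    return ranges

# Usage (paths are placeholders):
#   a = open("sample_a.bin", "rb").read()
#   b = open("sample_b.bin", "rb").read()
#   for start, end in diff_ranges(a, b):
#       print(hex(start), a[start:end], b[start:end])
```

If every reported range falls inside the IP string, the rest of the two binaries is byte-for-byte identical, which is consistent with the matching BuildIDs.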

eb7ba9f7424dffdb7d695b00007a3c6d was the sample being discussed in the chat room; the user mentioned that the file was dropped after Log4j exploitation.

Finally, there is 97f352e2808c78eef9b31c758ca13032, and I'm not sure where it came from. I discovered this sample by searching Hybrid Analysis with the following yara rule:

rule elf_backdoor_fipps

{

strings:

$a = "found mac address"

$b = "RecvThread"

$c = "OpenSSL-1.0.0-fipps"

$d = "Disconnected!"

condition:

(all of them) and uint32(0) == 0x464c457f

}

(The string "dbus-statd" also appears in all the binaries.)
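For triaging files on a box without yara installed, the same condition can be approximated in plain Python. This is only a sketch of the rule's logic (the function name `matches_fipps_rule` is mine): all four strings must be present, and the first four bytes, read as a little-endian 32-bit integer, must equal 0x464c457f, which is the ELF magic `\x7fELF`.

```python
import struct

# The four strings from the yara rule, matched as case-sensitive ASCII.
MARKERS = (
    b"found mac address",
    b"RecvThread",
    b"OpenSSL-1.0.0-fipps",
    b"Disconnected!",
)


def matches_fipps_rule(data: bytes) -> bool:
    """Approximate the rule: ELF magic at offset 0 and all marker strings present."""
    if len(data) < 4:
        return False
    # Equivalent of `uint32(0) == 0x464c457f` (little-endian read at offset 0).
    magic = struct.unpack_from("<I", data, 0)[0]
    return magic == 0x464C457F and all(marker in data for marker in MARKERS)
```

Since the strings are trivial for the author to change, a miss here says little; a hit is the useful signal.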

There is also a Suricata signature published by Proofpoint/EmergingThreats:

alert tcp any any -> any 443 (msg:"ET MALWARE Malicious ELF Activity"; dsize:<50; content:"OpenSSL-1.0.0-fipps"; startswith; fast_pattern; reference:md5,eb7ba9f7424dffdb7d695b00007a3c6d; classtype:trojan-activity; sid:2036592; rev:1; metadata:affected_product Mac_OSX, affected_product Linux, attack_target Client_Endpoint, created_at 2022_05_12, deployment Perimeter, former_category MALWARE, signature_severity Major, tag RAT, updated_at 2022_05_12;)

The Suricata signature above matches the initial connection from the backdoor to the C2 server.

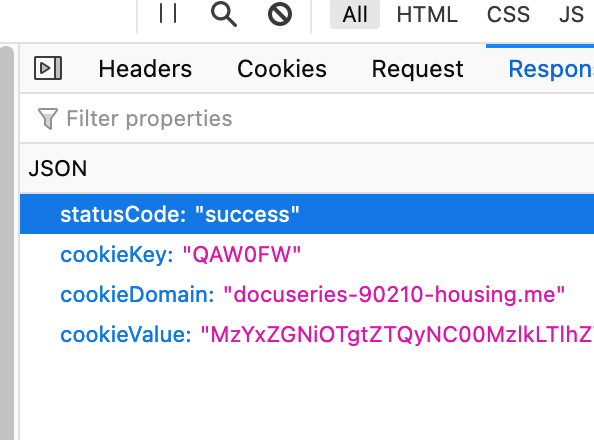

When the sample runs, it prints "!!!Hello World!!!" and the MAC address it found, connects to the C2 server, and sends OpenSSL-1.0.0-fipps and the MAC address.
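The payload test that the Suricata signature applies to this first packet can be sketched in Python, for example when going through a pcap by hand. The function name `looks_like_beacon` is hypothetical; the three conditions (destination port 443, payload under 50 bytes, payload starting with the marker string) are read straight from the rule. I'm not assuming anything about how the MAC address is laid out after the marker.

```python
BEACON_PREFIX = b"OpenSSL-1.0.0-fipps"


def looks_like_beacon(payload: bytes, dst_port: int) -> bool:
    """Mirror the rule's checks: port 443, dsize < 50, content at start of payload."""
    return (
        dst_port == 443
        and len(payload) < 50
        and payload.startswith(BEACON_PREFIX)
    )
```

Note that the port check only reflects what the signature looks for; a sample configured with a different port would need that condition relaxed.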

There also appears to be what I guess is a heartbeat packet, which looks like this:

Command processing takes place in FUN_00401f23 (I'm just using the names Ghidra assigns):

The binary is stripped, and I wasn't able to figure out or execute every single function. It has typical backdoor capabilities, and it's also able to gather some info and send it back to the C2. The output also seems to be encoded (by FUN_00402181 ??) before it gets sent over the network.

1: grab user and system info

5: write file?

7: not sure

0xb: delete a file

0xd: directory/file listing?

0xf: not sure

0x11: not sure

0x13: not sure

0x17: seems to return c2 connection info

It also has functions for making network connections, killing processes, etc.
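For scripting against the sample, the command IDs above can be kept in a small lookup table. This is just my notes restated as a Python dict; the name `describe_command` is made up, and entries I wasn't sure about are flagged as unconfirmed or left as `None`.

```python
# Command IDs seen in FUN_00401f23, with my current best guess for each.
# None means I could not work out what the handler does.
COMMANDS = {
    0x01: "grab user and system info",
    0x05: "write file (unconfirmed)",
    0x07: None,
    0x0B: "delete a file",
    0x0D: "directory/file listing (unconfirmed)",
    0x0F: None,
    0x11: None,
    0x13: None,
    0x17: "return C2 connection info (unconfirmed)",
}


def describe_command(cmd: int) -> str:
    """Return a short label for a command ID, based on the notes above."""
    if cmd not in COMMANDS:
        return "not in my notes"
    return COMMANDS[cmd] or "handler present, purpose unknown"
```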

From doing some testing, the command input that it expects seems to be 16 bytes. The following worked for me for deleting a file:

\x00\x00\x00\x00\x00\x00\x00\x00\x0b\x00 (command) \x05\x00 (input size)\x00\x00\x00\x00delme

0b is the command (the byte next to 0b is supposed to be the secondary command, if that function supports one) and 05 is the number of bytes to read afterwards; delme is 5 bytes.

I'm not sure about the impact of changing the other values in that 16-byte input, but I know the first 8 bytes change the way the backdoor processes and encodes the input.
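The delete example can be rebuilt with Python's struct module. This is a sketch under assumptions: I'm treating the size as a little-endian 16-bit field because the working input had `\x05\x00` there, but I only tested small sizes, so the field could be narrower or wider. The names `build_command`, `prefix` and `trailer` are mine, and the meaning of the prefix and trailer bytes is unknown.

```python
import struct

# Assumed layout of the 16-byte header:
#   8 bytes  prefix (affects processing/encoding; zeros worked for me)
#   1 byte   command
#   1 byte   secondary command
#   2 bytes  payload size (ASSUMED little-endian uint16)
#   4 bytes  trailer (unknown; zeros worked for me)
HEADER = struct.Struct("<8sBBH4s")


def build_command(cmd: int, payload: bytes = b"", sub: int = 0,
                  prefix: bytes = b"\x00" * 8,
                  trailer: bytes = b"\x00" * 4) -> bytes:
    """Build header + payload in the layout that worked for the delete command."""
    return HEADER.pack(prefix, cmd, sub, len(payload), trailer) + payload

# Usage: the delete-file input shown above.
#   build_command(0x0B, b"delme")
```

Only run this against a sample you control in an isolated lab; with a non-zero prefix the backdoor handles the input differently, and I haven't mapped that out.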

Conclusion:

It's a weird backdoor that I haven't found much info about, and I haven't seen it fully run in a sandbox while connected to its C2.

I assume it's being used after initial access through a web/external vulnerability (according to the tweets about the latest sample, the threat actor had some usernames and Active Directory info taken from an organization they had breached). I'm not sure, though, as I wasn't able to find many samples or reports with the TELFhash and the yara rule I made. It's very easy for the threat actor to modify the strings in the binary. I also wrote yara rules for some specific assembly instructions I saw, but they only returned the files I already had.

Links: